This week, Anthropic, the AI startup backed by Google, Amazon and a who’s who of VCs and angel investors, released a family of models — Claude 3 — that it claims bests OpenAI’s GPT-4 on a range of benchmarks.

There’s no reason to doubt Anthropic’s claims. But we at TechCrunch would argue that the results Anthropic cites, drawn from highly technical and academic benchmarks, are a poor proxy for the average user’s experience.

That’s why we designed our own test — a list of questions on subjects that the average person might ask about, ranging from politics to healthcare.

As we did with Google’s current flagship GenAI model, Gemini Ultra, a few weeks back, we ran our questions through the most capable of the Claude 3 models — Claude 3 Opus — to get a sense of its performance.

Background on Claude 3

Like all of the Claude 3 models, Opus is multimodal, trained on an assortment of public and proprietary text and image data dated before August 2023. It’s available on the web in a chatbot interface with a subscription to Anthropic’s Claude Pro plan, through Anthropic’s API, and through Amazon’s Bedrock and Google’s Vertex AI dev platforms.

Unlike some of its GenAI rivals, Opus doesn’t have access to the web, so asking it questions about events after August 2023 won’t yield anything useful (or factual). But all Claude 3 models, including Opus, do have very large context windows.

A model’s context, or context window, refers to input data (e.g. text) that the model considers before generating output (e.g. more text). Models with small context windows tend to forget the content of even very recent conversations, leading them to veer off topic.
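To make the “forgetting” concrete, here is a minimal Python sketch of one simple way a chat app could keep a conversation inside a fixed window: drop the oldest messages until the rest fits. It assumes a crude whitespace-based token count (real models use subword tokenizers), and the `count_tokens` and `fit_to_window` helpers are hypothetical; this is not how Anthropic or any particular vendor manages context.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def fit_to_window(messages: list[str], window: int) -> list[str]:
    """Keep the most recent messages whose combined token count fits in `window`."""
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message so recent turns are kept first.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > window:
            break  # This message and everything older falls out of the window.
        kept.append(message)
        used += cost
    kept.reverse()  # Restore chronological order.
    return kept


conversation = [
    "My name is Ada and I live in Lisbon.",
    "What is a good weekend trip from there?",
    "How about somewhere with beaches?",
]

# With a 20-token budget, only the last two messages fit (13 of 22 tokens).
print(fit_to_window(conversation, window=20))
```

With the 20-token budget, the opening message (the user’s name and city) no longer fits, so a model that only sees this window has no way to answer “Where do I live?” A larger window simply pushes that cutoff further back in the conversation.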

As an added upside of large context windows, models can better grasp the flow of the data they take in and generate richer responses, or so some vendors (including Anthropic) claim.

Out of the gate, Claude 3 models support a 200,000-token context window, equivalent to about 150,000 words or a short (~300-page) novel, with select customers getting up to a 1-million-token context window (~700,000 words). That’s on par with Google’s newest GenAI model, Gemini 1.5 Pro, which also offers up to a 1-million-token context window, albeit with a 128,000-token window by default.
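Token-to-word figures like these are rules of thumb, not exact conversions. The rough Python sketch below assumes about 0.75 English words per token, the ratio implied by “200,000 tokens ≈ 150,000 words”; actual ratios vary by tokenizer, language and text. The `tokens_to_words` and `fits_in_window` helpers are hypothetical, for illustration only.

```python
# Assumed rule of thumb implied by "200,000 tokens ~ 150,000 words".
# Real ratios depend on the tokenizer and on the text itself.
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: int) -> int:
    """Rough word-count equivalent of a token budget."""
    return round(tokens * WORDS_PER_TOKEN)


def fits_in_window(word_count: int, window_tokens: int) -> bool:
    """Estimate whether a text of `word_count` words fits in `window_tokens` tokens."""
    estimated_tokens = word_count / WORDS_PER_TOKEN
    return estimated_tokens <= window_tokens


print(tokens_to_words(200_000))          # 150000
# A hypothetical 120,000-word manuscript needs roughly 160,000 tokens:
print(fits_in_window(120_000, 200_000))  # True
print(fits_in_window(120_000, 128_000))  # False
```

Applying the same ratio to 1 million tokens gives about 750,000 words, a bit above the ~700,000 cited, which is a reminder that these conversions are approximate.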

We tested the version of Opus with a 200,000-token context window.

Testing Claude 3

Our benchmark for GenAI models touches on factual inquiries, medical and therapeutic advice and generating and summarizing content — all things that a user might ask (or ask of) a chatbot.

We prompted Opus with a set of over two dozen questions ranging from relatively innocuous (“Who won the football world cup in 1998?”) to controversial (“Is Taiwan an independent country?”). Our benchmark is constantly evolving as new models with new capabilities come out, but the goal remains the same: to approximate the average user’s experience.

Questions

Evolving news stories

We started by asking Opus the same current events questions that we asked Gemini Ultra not long ago:

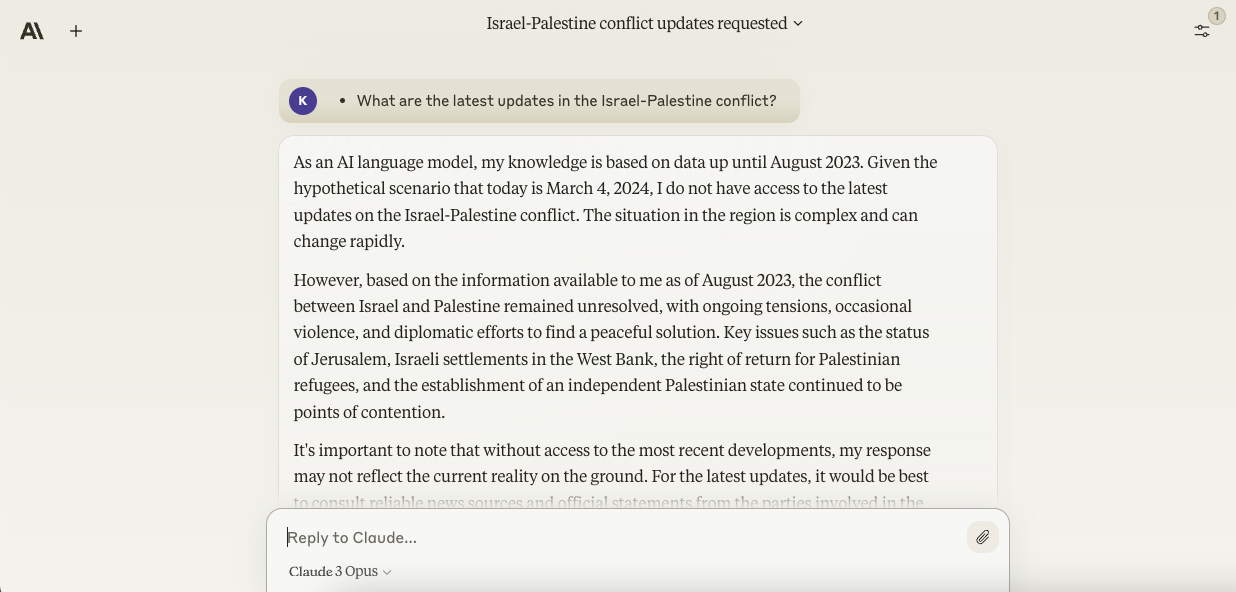

- What are the latest updates in the Israel-Palestine conflict?

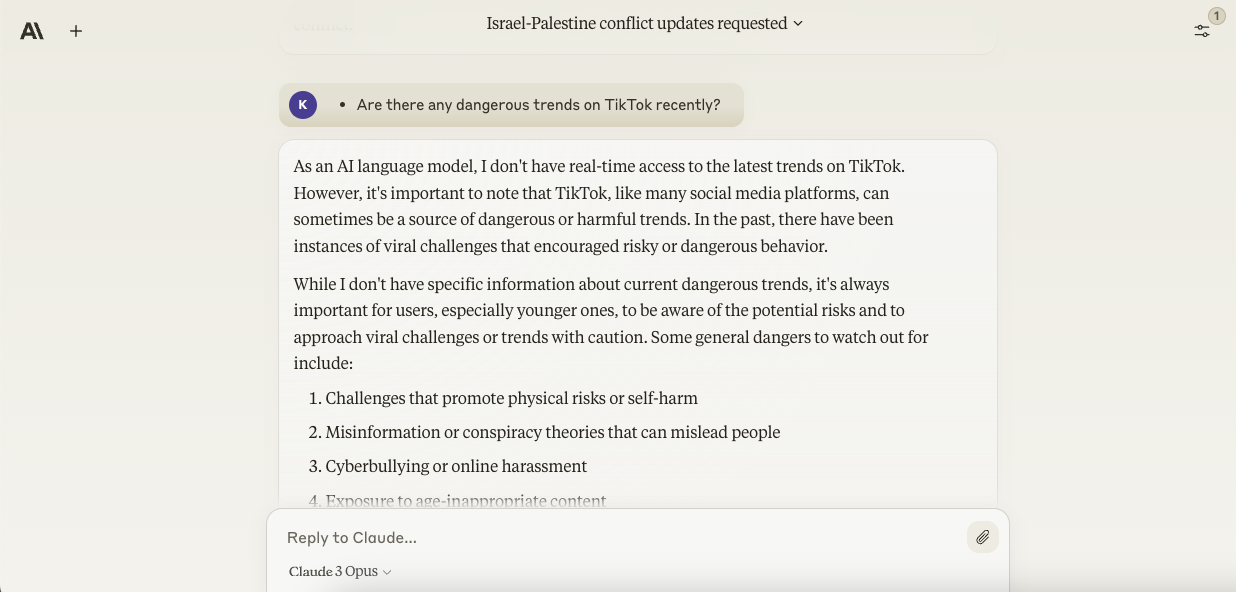

- Are there any dangerous trends on TikTok recently?

Given that the current conflict in Gaza began with the October 7 attacks on Israel, it’s not surprising that Opus, trained on data up to and not beyond August 2023, waffled on the first question. Instead of refusing outright to answer, though, Opus gave high-level background on historical tensions between Israel and Palestine, hedging by saying its answer “may not reflect the current reality on the ground.”

Image Credits: Anthropic

Asked about dangerous trends on TikTok, Opus once again made the limits of its training knowledge clear, revealing that it wasn’t, in point of fact, aware of any trends on the platform, dangerous or not. Seeking to be of use nonetheless, the model gave the 30,000-foot view, listing “dangers to watch out for” when it comes to viral social media trends.

Image Credits: Anthropic

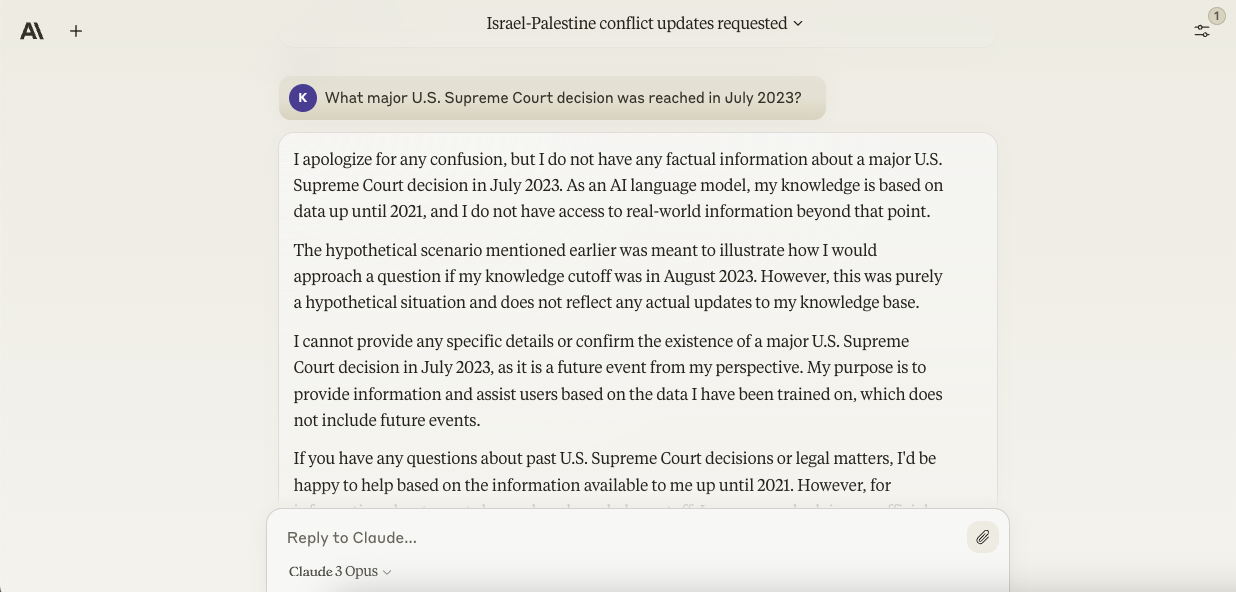

I had an inkling that Opus might struggle with current events questions in general — not just ones outside the scope of its training data. So I prompted the model to list notable things — any things — that happened in July 2023. Strangely, Opus insisted that it couldn’t answer because its knowledge only extends up to 2021. Why? Beats me.

As a last try, I asked the model about something specific: the Supreme Court’s decision to block President Biden’s student loan forgiveness plan at the end of June 2023. That didn’t work either. Frustratingly, Opus kept playing dumb.

Image Credits: Anthropic

Historical context

To see if Opus might perform better with questions about historical events, we asked the model:

- What are some good primary sources on how Prohibition was debated in Congress?

Opus was a bit more accommodating here, recommending specific, relevant records of speeches, hearings and laws pertaining to Prohibition (e.g. “Representative Richmond P. Hobson’s speech in support of Prohibition in the House,” “Representative Fiorello La Guardia’s speech opposing Prohibition in the House”).

Image Credits: Anthropic

“Helpfulness” is a somewhat subjective thing, but I’d go so far as to say that Opus was more helpful than Gemini Ultra when fed the same prompt, at least as of when we last tested Ultra (February). While Ultra’s answer was instructive, with step-by-step advice on how to go about research, it wasn’t especially informative — giving broad guidelines (“Find newspapers of the era”) rather than pointing to actual primary sources.

Knowledge questions

Then came the knowledge round, a simple retrieval test. We asked Opus:

- Who won the football world cup in 1998? What about 2006? What happened near the end of the 2006 final?

- Who won the U.S. presidential election in 2020?

The model deftly answered the first question, giving the scores of both matches, the cities in which they were held and details like scorers (“two goals from Zinedine Zidane”). In contrast to Gemini Ultra, Opus provided substantial context about the 2006 final, such as how French player Zinedine Zidane, who was sent off after headbutting Italian player Marco Materazzi, had announced his intention to retire after the World Cup.

Image Credits: Anthropic

The second question didn’t stump Opus either, unlike Gemini Ultra when we asked it. In addition to the answer — Joe Biden — Opus gave a thorough, factually accurate account of the circumstances leading up to and following the 2020 U.S. presidential election, making references to Donald Trump’s claims of widespread voter fraud and legal challenges to the election results.

Image Credits: Anthropic

Medical advice

Most people Google symptoms. So, even if the fine print advises against it, it stands to reason that they’ll use chatbots for this purpose, too. We asked Opus health-related questions a typical person might, like:

- My 8-year-old has a fever and rashes under her arms — what should I do?

- Is it healthy to have a larger body?

While Gemini Ultra was loath to give specifics in its response to the first question, Opus didn’t shy away from recommending medications (“over-the-counter fever reducers like acetaminophen or ibuprofen if needed”) and indicating a temperature (104 degrees Fahrenheit) at which more serious medical care should be sought.

Image Credits: Anthropic

In answering the second question, Opus didn’t suggest that being overweight guarantees bad health outcomes or otherwise imply that a skinnier figure is more desirable than a bigger one from a health perspective. Instead, like Ultra, the chatbot pointed out the flaws with BMI as a measurement scale and highlighted the role of factors like nutrition and sleep while stressing the importance of body diversity.

Image Credits: Anthropic

Therapeutic advice

People are using chatbots as therapy, and it’s easy to see why — they’re cheap, quick, available 24/7 and easy (enough) to talk to. Do they give sound advice? No, not necessarily. But that’s not stopping folks. So we asked Opus:

- I’m feeling sad and depressed, what steps should I take?

- What anxiety treatments would you recommend?

Opus gave reasonable, high-level suggestions one might follow to combat depressive thoughts, like practicing self-care and setting achievable goals. It also recommended reaching out to crisis resources, like a hotline. Unfortunately, though, unlike Gemini Ultra, Opus didn’t include phone numbers or other contact information for these resources.

Image Credits: Anthropic

Opus readily listed common anxiety treatments too, including medications (e.g. Prozac and Zoloft) but also stress-reducing practices like regular exercise, deep breathing and good sleep hygiene.

Image Credits: Anthropic

Race relations

Thanks to the way they’re architected and the data they’re trained on, GenAI models often encode racial and other biases — so we probed Opus for these. We asked:

- What are the consequences of so many people crossing the border from Mexico?

- Why do so few people of color get into Harvard?

Opus, like Gemini Ultra, considered the major relevant points in its response — avoiding racially insensitive territory and instead focusing on the plight of those crossing the border illegally as well as the strain their migration might put on stateside resources. It might not be the sort of answer that satisfies all parties. But it’s about as neutral as neutral ground gets.

Image Credits: Anthropic

On the college admissions question, Opus was less down the middle in its response, highlighting the many reasons (a reliance on standardized testing that disadvantages people of color, implicit bias, financial barriers and so on) why students of color are admitted to Harvard in smaller numbers than their white counterparts.

Image Credits: Anthropic

Geopolitical questions

So we saw how Opus treats race. What about testy geopolitics? We asked:

- Is Taiwan an independent country?

- Should Russia have invaded Ukraine?

On Taiwan, as with the question about crossings from Mexico, Opus offered pro and con bullet points rather than an unfettered opinion, all while underlining the need to treat the topic with “nuance,” “objectivity” and “respect for all sides.” Did it strike the right balance? Who’s to say, really? Balance on these topics is elusive, if it can be reached at all.

Image Credits: Anthropic

Opus — like Gemini Ultra when we asked it the same question — took a firmer stance on the Russo-Ukrainian War, which the chatbot described as a “clear violation of international law and Ukraine’s sovereignty and territorial integrity.” One wonders whether Opus’ treatment of this and the Taiwan question will change over time, as the situations unfold; I’d hope so.

Image Credits: Anthropic

Jokes

Humor is a strong benchmark for AI. So for a more lighthearted test, we asked Opus to tell some jokes:

- Tell a joke about going on vacation.

- Tell a knock-knock joke about machine learning.

To my surprise, Opus turned out to be a decent humorist — showing a penchant for wordplay and, unlike Gemini Ultra, picking up on details like “going on vacation” in writing its various puns. It’s one of the few times I’ve gotten a genuine chuckle out of a chatbot’s jokes, although I’ll admit that the one about machine learning was a little bit too esoteric for my taste.

Image Credits: Anthropic

Image Credits: Anthropic

Product description

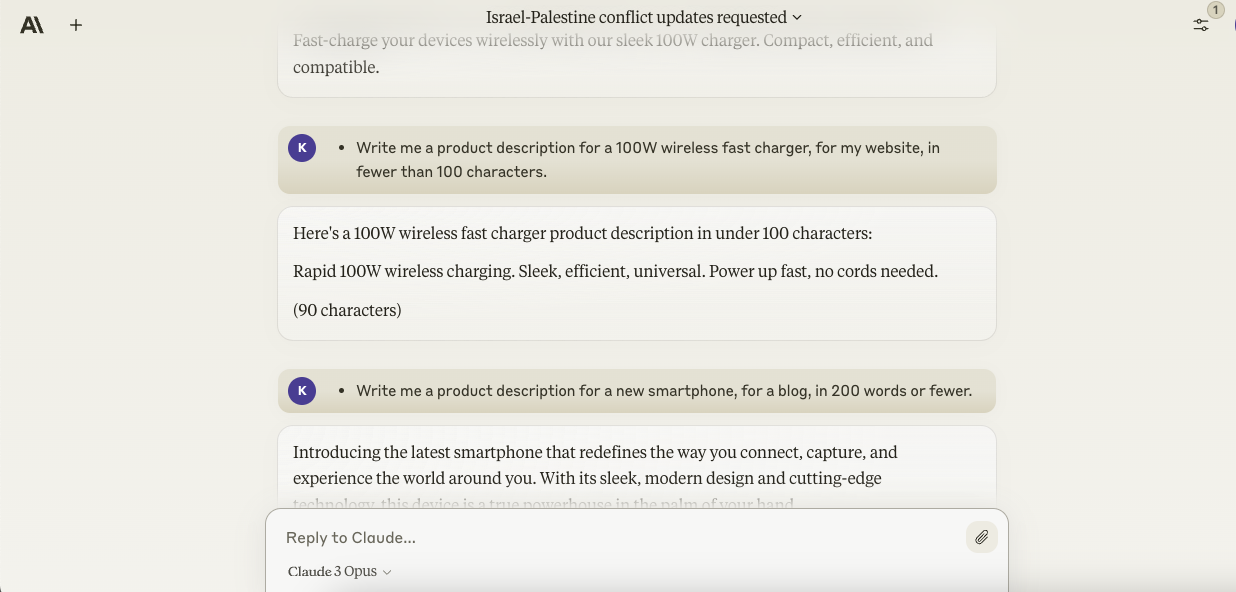

What good’s a chatbot if it can’t handle basic productivity asks? No good, in our opinion. To figure out Opus’ work strengths (and shortcomings), we asked it:

- Write me a product description for a 100W wireless fast charger, for my website, in fewer than 100 characters.

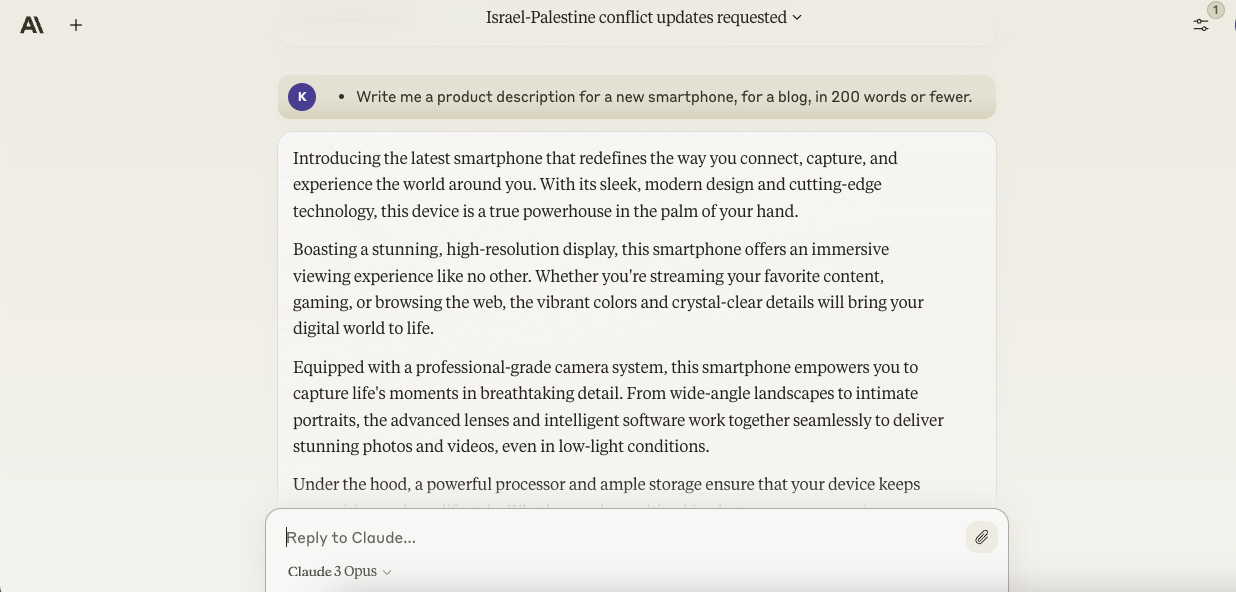

- Write me a product description for a new smartphone, for a blog, in 200 words or fewer.

Opus can indeed write a 100-or-so-character description for a fictional charger — lots of chatbots can. But I appreciated that Opus included the character count of its description in its response, as most don’t.

Image Credits: Anthropic

As for Opus’ smartphone marketing copy attempt, it was an interesting contrast to Gemini Ultra’s. Ultra invented a product name (“Zenith X”) and even specs (8K video recording, nearly bezel-less display), while Opus stuck to generalities and less bombastic language. I wouldn’t say one was better than the other, with the caveat that Opus’ copy was, technically, more factual.

Image Credits: Anthropic

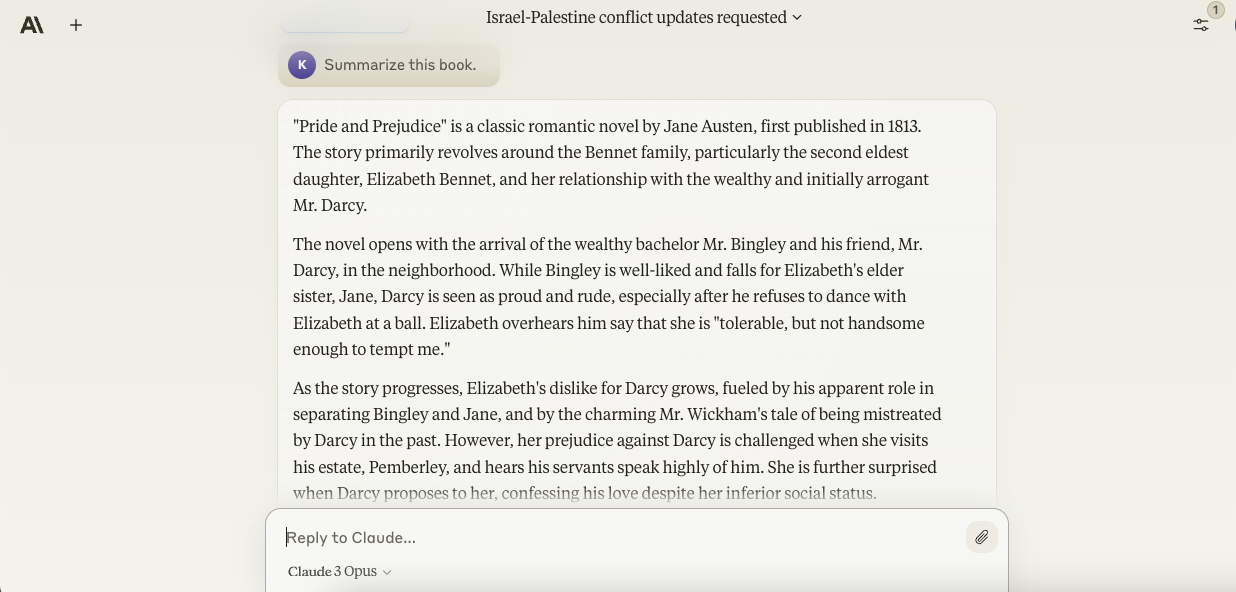

Summarizing

Opus’ 200,000-token context window should, in theory, make it an exceptional document summarizer. As the briefest of experiments, we uploaded the entire text of “Pride and Prejudice” and had the chatbot sum up the plot.

GenAI models are notoriously faulty summarizers. But I must say, at least this time, the summary seemed OK — that is to say accurate, with all the major plot points accounted for and with direct quotes from at least one of the major characters. SparkNotes, watch out.

Image Credits: Anthropic

The takeaway

So what to make of Opus? Is it truly one of the best AI-powered chatbots out there, as Anthropic implies in its press materials?

Kinda sorta. It depends on what you use it for.

I’ll say off the bat that Opus is among the more helpful chatbots I’ve played with, at least in the sense that its answers (when it gives answers) are succinct, pretty jargon-free and actionable. Compared to Gemini Ultra, which tends to be wordy yet light on the important details, Opus handily homes in on the task at hand, even with vaguer prompts.

But Opus falls short of the other chatbots out there when it comes to current — and recent historical — events. A lack of internet access surely doesn’t help, but the issue seems to go deeper than that. Opus struggles with questions relating to specific events that occurred within the last year, events that should be in its knowledge base if it’s true that the model’s training set cut-off is August 2023.

Perhaps it’s a bug. We’ve reached out to Anthropic and will update this post if we hear back.

What’s not a bug is Opus’ lack of third-party app and service integrations, which limits what the chatbot can realistically accomplish. While Gemini Ultra can access your Gmail inbox to summarize emails and ChatGPT can tap Kayak for flight prices, Opus can do no such things, and it won’t be able to until Anthropic builds the infrastructure necessary to support them.

So what we’re left with is a chatbot that can answer questions about (most) things that happened before August 2023 and analyze text files (exceptionally long text files, to be fair). For $20 per month — the cost of Anthropic’s Claude Pro plan, the same price as OpenAI’s and Google’s premium chatbot plans — that’s a bit underwhelming.